S3

Prerequisites for Configuring S3 Data Source

AWS Requirements

For private buckets, you'll need:

- An AWS account with permissions to read from the bucket

- S3 Bucket Name

- S3 Bucket Path

- S3 Bucket Region

If you are using STS Assume Role, you must provide the following:

- Role ARN

Otherwise, if you are using AWS credentials you must provide the following:

- Access Key ID

- Secret Access Key

If you are using an Instance Profile, you may omit the Access Key ID, the Secret Access Key, and the Role ARN.
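The three authentication options above amount to a small decision rule. The sketch below is illustrative only: `pick_auth_mode` and the labels it returns are hypothetical names, not part of InsightsIQ, and giving the Role ARN precedence when keys are also supplied is an assumption.

```python
from typing import Optional


def pick_auth_mode(
    role_arn: Optional[str] = None,
    access_key_id: Optional[str] = None,
    secret_access_key: Optional[str] = None,
) -> str:
    """Return which authentication option a set of inputs corresponds to.

    Hypothetical helper; the returned labels are illustrative only.
    """
    if role_arn:
        # STS Assume Role: a Role ARN is required (assumed to take precedence).
        return "assume_role"
    if access_key_id and secret_access_key:
        # AWS credentials: both halves of the key pair are required.
        return "access_keys"
    if access_key_id or secret_access_key:
        raise ValueError(
            "Access Key ID and Secret Access Key must be provided together"
        )
    # Nothing supplied: rely on the instance profile attached to the host.
    return "instance_profile"
```

Raising an error for a half-filled key pair is a design choice of the sketch, so that a missing secret is not silently treated as an instance profile setup.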

Additionally, the following prerequisites are required:

- Allow connections from the InsightsIQ server to your AWS S3 or MinIO S3 cluster (if they are in separate VPCs)

- An S3 bucket with credentials, a Role ARN, or an instance profile with read/write permissions configured for the host (EC2, EKS)

- Enforce encryption of data in transit
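As a rough illustration of the bucket permissions mentioned above, the sketch below builds a minimal read-only IAM policy document. The function name `read_only_policy` and the bucket and prefix values are hypothetical, and limiting the policy to `s3:ListBucket` and `s3:GetObject` is an assumption about what reading requires. If your setup needs write access, actions such as `s3:PutObject` would have to be added.

```python
import json


def read_only_policy(bucket: str, prefix: str = "") -> dict:
    """Build an IAM policy document granting read access to one bucket.

    Hypothetical helper; adjust actions and resources to your environment.
    """
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Listing is granted on the bucket itself.
                "Effect": "Allow",
                "Action": ["s3:ListBucket"],
                "Resource": [f"arn:aws:s3:::{bucket}"],
            },
            {
                # Object reads are granted on keys under the prefix.
                "Effect": "Allow",
                "Action": ["s3:GetObject"],
                "Resource": [f"arn:aws:s3:::{bucket}/{prefix}*"],
            },
        ],
    }


if __name__ == "__main__":
    # Placeholder bucket and prefix, for illustration only.
    print(json.dumps(read_only_policy("example-bucket", "myFolder/"), indent=2))
```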

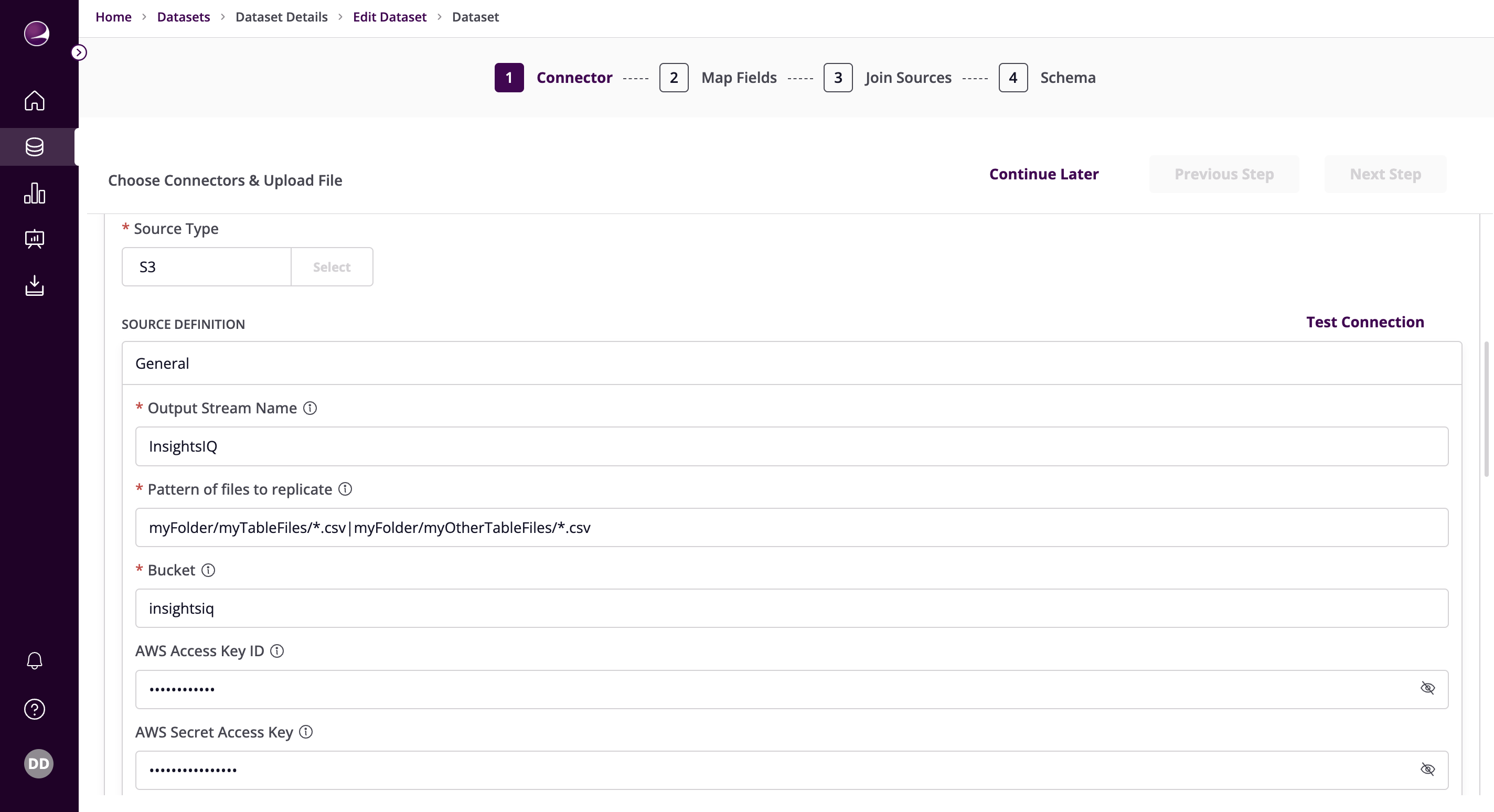

Configuring S3 Data Source

Select the Source Type as S3

Fill in the required details:

Output Stream Name - The name of the stream you'd like this source to output. Can contain letters, numbers or underscores.
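The naming rule above can be checked before submitting the form. This is a minimal sketch: `is_valid_stream_name` is a hypothetical helper, and treating "letters" as ASCII letters only is an assumption.

```python
import re

# Letters, digits, and underscores only (ASCII letters assumed).
STREAM_NAME_RE = re.compile(r"[A-Za-z0-9_]+")


def is_valid_stream_name(name: str) -> bool:
    """Return True if the whole name uses only the allowed characters."""
    return STREAM_NAME_RE.fullmatch(name) is not None
```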

Pattern of files to replicate - A regular expression which tells the connector which files to replicate. All files which match this pattern will be replicated. Use | to separate multiple patterns. Use the pattern `**` to pick up all files. Examples: `**`, `myFolder/myTableFiles/*.csv|myFolder/myOtherTableFiles/*.csv`
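To get a feel for how several patterns separated by | select files, the sketch below approximates the matching with the standard library. It assumes glob-style wildcards, where `*` stays within one folder level and `**` spans levels. That is an assumption, the helper names are hypothetical, and the connector's actual matcher may behave differently.

```python
import re


def glob_to_regex(pattern: str) -> str:
    """Translate a simple glob into a regular expression string."""
    parts = []
    i = 0
    while i < len(pattern):
        if pattern.startswith("**", i):
            parts.append(".*")      # '**' may cross folder boundaries
            i += 2
        elif pattern[i] == "*":
            parts.append("[^/]*")   # '*' stays inside one folder level
            i += 1
        elif pattern[i] == "?":
            parts.append("[^/]")    # '?' is a single non-separator character
            i += 1
        else:
            parts.append(re.escape(pattern[i]))
            i += 1
    return "".join(parts)


def matches(key: str, patterns: str) -> bool:
    """Return True if the key matches any of the '|'-separated patterns."""
    return any(
        re.fullmatch(glob_to_regex(p), key) is not None
        for p in patterns.split("|")
    )
```

Under these assumptions, `matches("myFolder/myTableFiles/a.csv", "myFolder/myTableFiles/*.csv|myFolder/myOtherTableFiles/*.csv")` is true, while a file in a deeper subfolder is only picked up by a pattern that uses `**`.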

Bucket - Name of the S3 bucket where the file(s) exist

AWS Access Key ID - To access private buckets stored on AWS S3, this connector requires credentials with the proper permissions. If accessing publicly available data, this field is not necessary.

AWS Secret Access Key - To access private buckets stored on AWS S3, this connector requires credentials with the proper permissions. If accessing publicly available data, this field is not necessary.

Path Prefix - Providing a path-like prefix (e.g. myFolder/thisTable/) under which all the relevant files sit lets the connector find them in S3 more efficiently. This is optional but recommended if your bucket contains many folders or files that you don't need to replicate.

Endpoint - Endpoint to an S3 compatible service. Leave empty to use AWS.

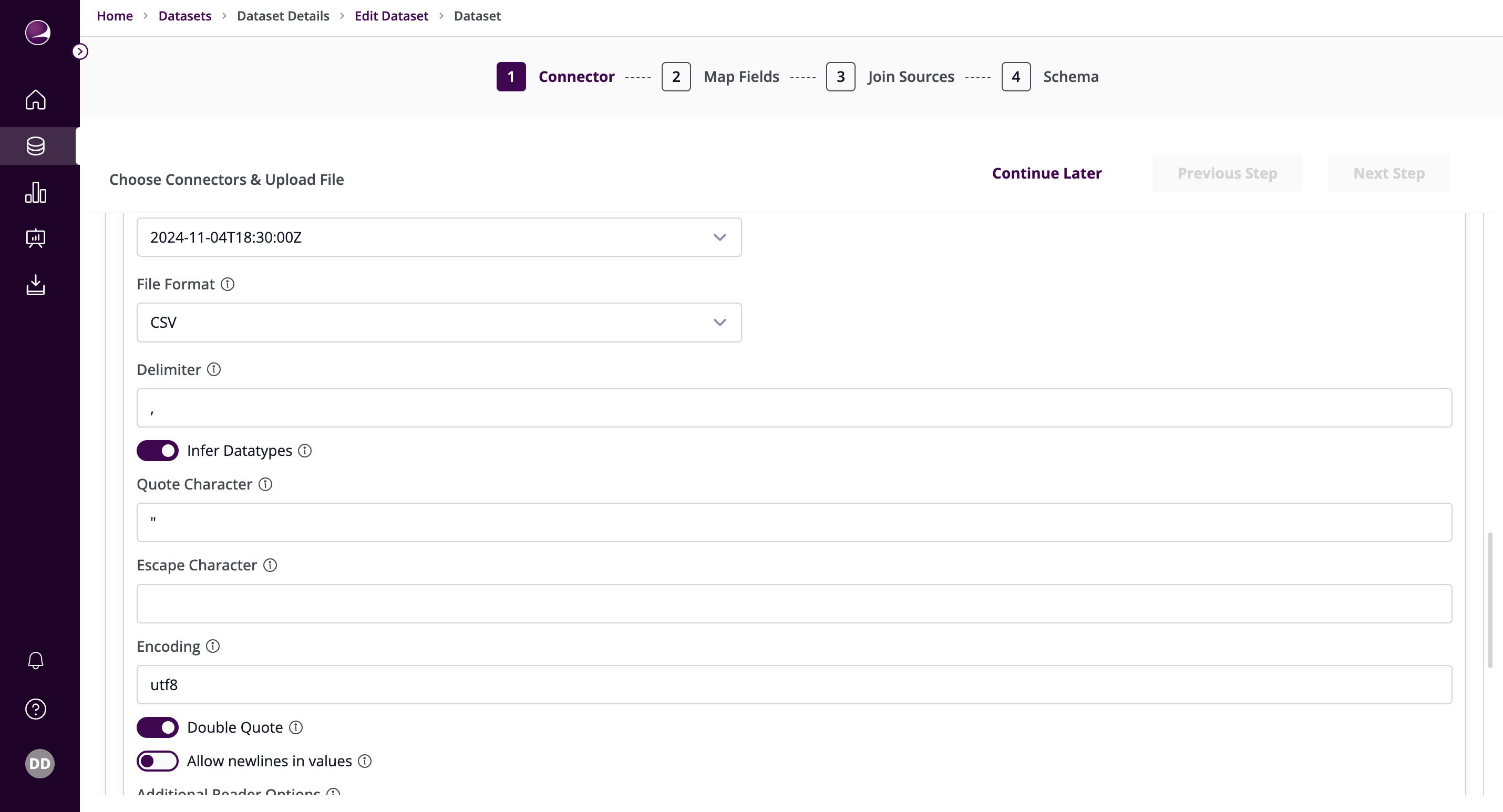

Start Date - UTC date and time in the format 2017-01-25T00:00:00Z. Any file modified before this date will not be replicated. Example: 2021-01-01T00:00:00Z
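The Start Date cutoff can be illustrated with the standard library. In this sketch the helper names are hypothetical, and the file's last-modified time is assumed to be a timezone-aware `datetime`.

```python
from datetime import datetime, timezone

# The format shown above, e.g. 2021-01-01T00:00:00Z
START_DATE_FORMAT = "%Y-%m-%dT%H:%M:%SZ"


def parse_start_date(value: str) -> datetime:
    """Parse the Start Date string into a timezone-aware UTC datetime."""
    return datetime.strptime(value, START_DATE_FORMAT).replace(tzinfo=timezone.utc)


def should_replicate(last_modified: datetime, start_date: str) -> bool:
    """Files modified before the start date are skipped.

    last_modified must be timezone-aware, otherwise the comparison
    raises a TypeError.
    """
    return last_modified >= parse_start_date(start_date)
```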

File Format - The format of the files you'd like to replicate

Delimiter - The character delimiting individual cells in the CSV data. This may only be a 1-character string. For tab-delimited data enter '\t'.

Infer Datatypes - Configures whether a schema for the source should be inferred from the current data. If this is set to false and a custom schema is set, the manually enforced schema is used. If this is set to false and no schema is set manually, all fields are read as strings.

Quote Character - The character used for quoting CSV values. To disallow quoting, leave this field blank.

Escape Character - The character used for escaping special characters. To disallow escaping, leave this field blank.

Encoding - The character encoding of the CSV data. Leave blank to default to UTF-8.

Double Quote - Whether two quotes in a quoted CSV value denote a single quote in the data.

Allow newlines in values - Whether newline characters are allowed in CSV values. Turning this on may affect performance.
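The effect of the delimiter, quote character, double quote, and newline settings is easiest to see on a small sample. The sketch below uses Python's built-in `csv` module purely for illustration. It is not the reader the connector uses, and the sample data and the `read_rows` helper are made up.

```python
import csv
import io

# Tab-delimited sample: one value contains doubled quotes,
# another contains a newline inside a quoted value.
SAMPLE = 'id\tnote\n1\t"say ""hi"""\n2\t"line one\nline two"\n'


def read_rows(text, delimiter="\t", quotechar='"', doublequote=True):
    """Parse CSV text with the given delimiter and quoting settings."""
    reader = csv.reader(
        io.StringIO(text, newline=""),
        delimiter=delimiter,
        quotechar=quotechar,
        doublequote=doublequote,
    )
    return list(reader)


if __name__ == "__main__":
    for row in read_rows(SAMPLE):
        print(row)
```

With double quoting enabled, the doubled quotes in the second row collapse to single quote characters, and the quoted newline in the third row stays inside one value instead of starting a new record.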

Additional Reader Options - Optionally add a valid JSON string to provide additional options to the CSV reader.

Advanced Options - Optionally add a valid JSON string here to provide additional PyArrow ReadOptions. Specify 'column_names' here if your CSV doesn't have a header, or if you want to use custom column names. 'block_size' and 'encoding' are already set by their own fields; specifying them again here overrides those values. Example: {"column_names": ["column1", "column2"]}

Block Size - The chunk size in bytes to process at a time in memory from each file. If your data is particularly wide and fails during schema detection, increasing this value should help. Beware of raising it too high, as you could hit out-of-memory (OOM) errors.
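The override behaviour described for Advanced Options can be pictured as a dictionary merge in which the JSON string wins. This is a sketch under assumptions: `effective_read_options` is a hypothetical helper, and the connector's internal handling may differ.

```python
import json


def effective_read_options(block_size, encoding, advanced_options_json=""):
    """Merge the form fields with the Advanced Options JSON string.

    Keys given in the JSON string override the Block Size and
    Encoding fields (hypothetical helper for illustration).
    """
    options = {
        "block_size": block_size,
        "encoding": encoding or "utf8",  # a blank Encoding falls back to UTF-8
    }
    options.update(json.loads(advanced_options_json or "{}"))
    return options


if __name__ == "__main__":
    # Custom column names for a CSV without a header row.
    print(effective_read_options(10000, "", '{"column_names": ["column1", "column2"]}'))
```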

Manually enforced data schema - Optionally provide a schema to enforce, as a valid JSON string. Ensure this is a mapping of { "column" : "type" }, where types are valid JSON Schema datatypes. Leave as {} to auto-infer the schema. Example: {"column_1": "number", "column_2": "string", "column_3": "array", "column_4": "object", "column_5": "boolean"}
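Before pasting a schema into this field, it can help to check that it is a flat mapping of column names to type names. The sketch below does that. `parse_enforced_schema` is a hypothetical helper, and the accepted type list is assumed to be the standard JSON Schema primitive types, which may not match exactly what the connector supports.

```python
import json

# Standard JSON Schema primitive types (assumed to be the accepted set).
JSON_SCHEMA_TYPES = {
    "string", "number", "integer", "boolean", "object", "array", "null",
}


def parse_enforced_schema(raw: str) -> dict:
    """Parse and validate a { "column": "type" } schema string."""
    schema = json.loads(raw)
    if not isinstance(schema, dict):
        raise ValueError("schema must be a JSON object mapping column to type")
    for column, type_name in schema.items():
        if not isinstance(type_name, str) or type_name not in JSON_SCHEMA_TYPES:
            raise ValueError(f"column {column!r} has unsupported type {type_name!r}")
    return schema
```

An empty mapping, {}, passes validation, which corresponds to leaving the schema to be inferred automatically.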

- Click Test Connection to verify that the connection is established successfully.

Note: If the connection test fails, check whether the IAM role associated with the EKS cluster has access to the bucket.